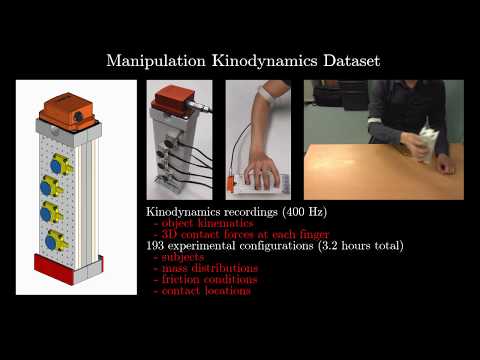

We consider the problem of estimating realistic contact forces during manipulation from vision alone, validated against ground-truth measurements. Interaction forces are usually measured by mounting force transducers onto the manipulated objects or the hands. Such sensors are costly and cumbersome, and they alter both the objects' physical properties and how those objects are perceived through the human sense of touch. Our work establishes that interaction forces can be estimated in a cost-effective, reliable, non-intrusive way using vision. This is a challenging problem: in multi-contact manipulation, a given motion can generally be caused by infinitely many force distributions. To alleviate the limitations of traditional models based on inverse optimization, we collect and release the first large-scale dataset on manipulation kinodynamics, comprising 3.2 hours of synchronized force and motion measurements under 193 object-grasp configurations. Using recurrent neural networks (RNN), we learn a mapping between high-level kinematic features based on the equations of motion and the underlying manipulation forces. The RNN predictions are consistently refined using physics-based optimization through second-order cone programming (SOCP). We show that our method, using a single RGB-D camera, can successfully capture interaction forces compatible with both the observations and the way humans intuitively manipulate objects.
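The force ambiguity mentioned above can be illustrated with a toy case. The sketch below is not the paper's method: it assumes a planar object pinched between two opposing fingers, and all names (`net_wrench`, `in_friction_cone`, `static_grasps`) and numeric values are hypothetical. It shows that every squeeze force above a friction-dependent minimum produces the same net force and torque, so the motion alone cannot identify the grip strength. The Coulomb friction bound used here is the planar form of the cone constraint that makes such problems expressible as SOCPs.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def net_wrench(n, t1, t2, r):
    """Net planar force and torque from two opposing contacts at x = -r and x = +r.

    Finger 1 pushes along +x with normal force n and friction t1 along +y;
    finger 2 pushes along -x with normal force n and friction t2 along +y.
    """
    fx = n - n                    # the two squeeze forces cancel
    fy = t1 + t2                  # friction carries the weight
    torque = (-r) * t1 + r * t2   # z-component of r x f about the object centre
    return fx, fy, torque


def in_friction_cone(n, t, mu):
    """Coulomb friction: tangential magnitude is bounded by mu times the normal force."""
    return n >= 0.0 and abs(t) <= mu * n


def static_grasps(mass, r, mu, squeezes):
    """Return the candidate squeeze forces that hold the object still without slipping."""
    t = mass * G / 2.0  # each finger carries half the weight
    feasible = []
    for n in squeezes:
        fx, fy, torque = net_wrench(n, t, t, r)
        balanced = (
            math.isclose(fx, 0.0, abs_tol=1e-9)
            and math.isclose(fy, mass * G)
            and math.isclose(torque, 0.0, abs_tol=1e-9)
        )
        if balanced and in_friction_cone(n, t, mu):
            feasible.append(n)
    return feasible


if __name__ == "__main__":
    print(static_grasps(mass=0.5, r=0.03, mu=0.8, squeezes=[1.0, 3.0, 5.0, 10.0, 50.0]))
```

With these assumed values, the 5 N, 10 N, and 50 N squeezes all balance the object identically, while 1 N and 3 N violate the friction bound. Resolving this kind of ambiguity is what motivates learning from measured human grasps rather than relying on optimization alone.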

Video

Reference

@article{tpami:pham:2017,
  author={Pham, Tu-Hoa and Kyriazis, Nikolaos and Argyros, Antonis A and Kheddar, Abderrahmane},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  title={Hand-Object Contact Force Estimation From Markerless Visual Tracking},
  year={2017},
  volume={PP},
  number={99},
  pages={1-1},
  keywords={Force sensing from vision;hand-object tracking;manipulation;pattern analysis;sensors;tracking},
  doi={10.1109/TPAMI.2017.2759736},
  ISSN={0162-8828},
}